Image Source: Microsoft

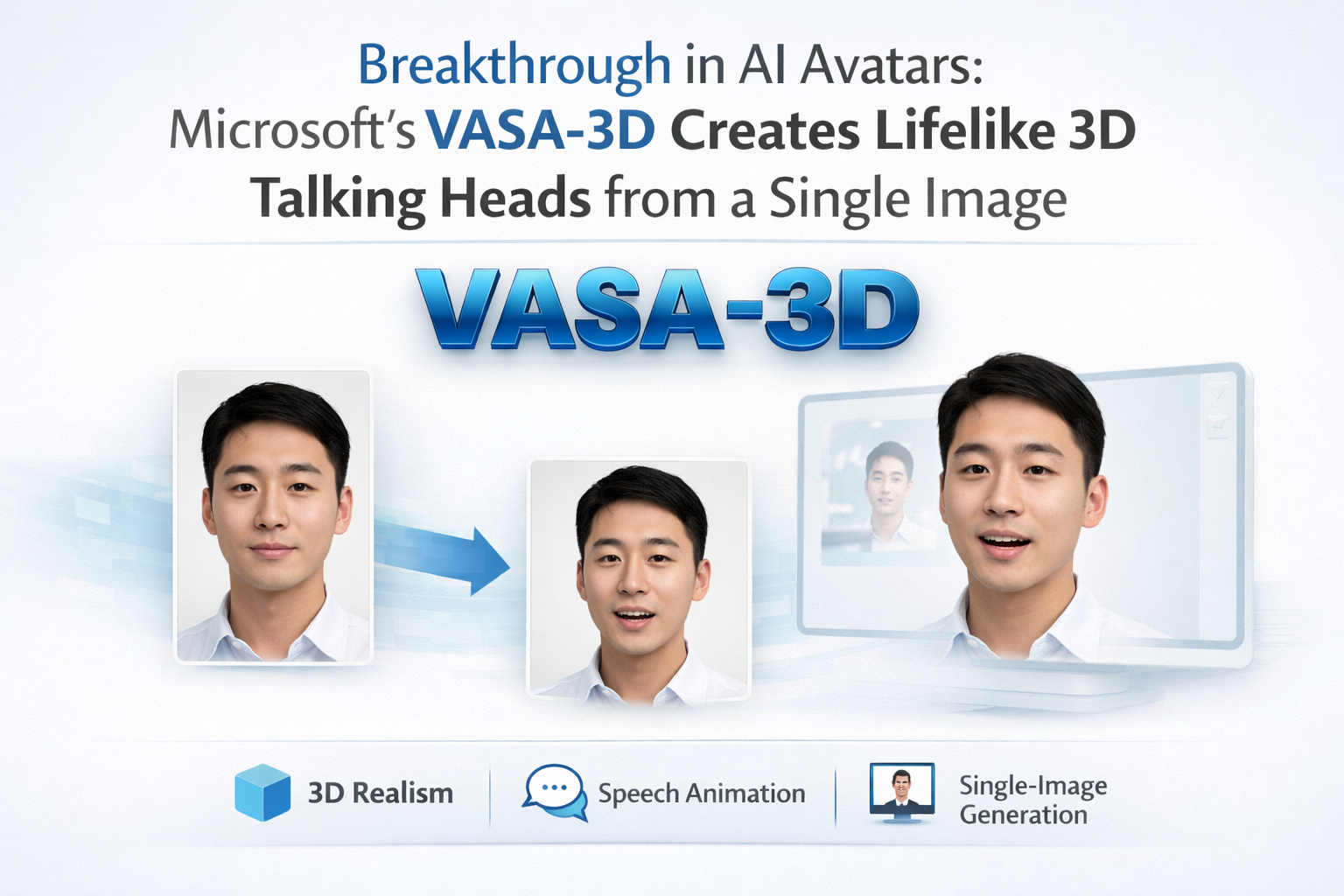

December 18, 2025 – In a major leap for AI-driven avatars and 3D facial animation, Microsoft Research Asia has unveiled VASA-3D, a framework that generates highly realistic, audio-driven 3D talking head avatars from just one portrait photo and an audio clip. Presented at NeurIPS 2025, it builds on the viral success of the earlier VASA-1 model, extending photorealistic 2D talking faces into fully renderable 3D space.

What Makes VASA-3D a Game-Changer for AI Avatars?

Traditional 3D talking head generation often requires multi-view images, lengthy video training data, or complex rigging, which makes it impractical for quick personalization. VASA-3D addresses this with a single-image approach, delivering expressive avatars that can be rendered from any viewpoint and stay closely synchronized with speech.

Key highlights:

- Real-time performance: Generates 512×512 videos at up to 75 FPS from any camera angle.

- Superior expressiveness: Captures subtle facial nuances, natural head movements, and precise lip synchronization far better than existing methods.

- One-shot personalization: No need for 3D scans or extensive video footage—just one static photo.
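
To give a rough sense of what the quoted figures imply, the arithmetic below converts the 512×512 resolution and 75 FPS rate from the list above into a per-frame time budget and a pixel throughput. The helper names are illustrative only and are not part of any VASA-3D code.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to produce one frame, in milliseconds."""
    return 1000.0 / fps


def pixels_per_second(width: int, height: int, fps: float) -> float:
    """Number of pixels rendered per second at the given resolution and rate."""
    return width * height * fps


budget = frame_budget_ms(75)                  # about 13.3 ms per frame
throughput = pixels_per_second(512, 512, 75)  # about 19.7 million pixels per second
print(f"{budget:.1f} ms per frame, {throughput / 1e6:.1f} Mpx/s")
```

For comparison, a 30 FPS stream leaves about 33 ms per frame, so the 75 FPS figure corresponds to less than half that time per frame.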

How VASA-3D Works: Technical Breakdown

The system cleverly combines 3D Gaussian splatting—a popular technique for efficient neural rendering—with a parametric head model called FLAME (for structured geometry).

- Motion from Pretrained 2D Model: It taps into the rich motion latent space of the original VASA-1 (trained on thousands of high-quality 2D talking face videos) to extract dynamic expressions.

- 3D Gaussian Representation: The head is modeled as deformable 3D Gaussians anchored to the FLAME mesh, allowing rigid head poses while adding freeform deformations for fine details like wrinkles, eye blinks, and mouth shapes.

- Audio-to-Motion Pipeline: Audio features drive the latent space, producing coherent animations that look authentically human.

This hybrid approach ensures both structural accuracy and the fluid, lifelike dynamics that made VASA-1 famous—now in full 3D.
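
To make the idea of Gaussians anchored to a mesh more concrete, here is a minimal sketch of one common binding scheme. Each Gaussian stores a triangle index, barycentric weights, and an offset along the triangle normal, so it follows the mesh as the mesh moves, and a separate residual vector stands in for the freeform deformation. This is an assumption made for illustration: the article does not say how VASA-3D parameterizes the binding, and every name here (`BoundGaussian`, `gaussian_center`, `residual`) is hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(a: Vec3) -> Vec3:
    length = math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)
    return scale(a, 1.0 / length)  # assumes a non-degenerate triangle


@dataclass
class BoundGaussian:
    """Hypothetical binding of one Gaussian center to one mesh triangle."""
    triangle: int          # index into the triangle list
    bary: Vec3             # barycentric weights, expected to sum to 1
    normal_offset: float   # displacement along the triangle normal


def gaussian_center(g: BoundGaussian,
                    vertices: List[Vec3],
                    triangles: List[Tuple[int, int, int]],
                    residual: Vec3 = (0.0, 0.0, 0.0)) -> Vec3:
    """Center of a bound Gaussian for the current mesh pose.

    The mesh carries the Gaussian (structured motion); `residual` is an
    extra freeform offset added on top (illustrative assumption).
    """
    i, j, k = triangles[g.triangle]
    v0, v1, v2 = vertices[i], vertices[j], vertices[k]
    # Point on the triangle surface given by the barycentric weights.
    base = add(add(scale(v0, g.bary[0]), scale(v1, g.bary[1])),
               scale(v2, g.bary[2]))
    # Unit normal of the triangle in its current pose.
    n = normalize(cross(sub(v1, v0), sub(v2, v0)))
    return add(add(base, scale(n, g.normal_offset)), residual)


# Toy mesh: a single triangle in the z = 0 plane.
vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
triangles = [(0, 1, 2)]
g = BoundGaussian(triangle=0, bary=(1 / 3, 1 / 3, 1 / 3), normal_offset=0.1)

rest = gaussian_center(g, vertices, triangles)

# Move the whole mesh up by one unit and add a small freeform offset.
moved_vertices = [add(v, (0.0, 0.0, 1.0)) for v in vertices]
moved = gaussian_center(g, moved_vertices, triangles, residual=(0.01, 0.0, 0.0))
```

The sketch tracks only the Gaussian centers. A full 3D Gaussian splatting representation also carries a rotation, scale, opacity, and color per Gaussian, which are omitted here.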

Why VASA-3D Outperforms Existing 3D Avatar Technologies

Compared to prior audio-driven 3D avatar systems (e.g., those relying on diffusion models or neural radiance fields), VASA-3D excels in:

- Photorealism and temporal consistency: Fewer artifacts, smoother motions.

- Efficiency: Real-time inference on consumer hardware.

- Accessibility: Single-image input democratizes high-end avatar creation for VR/AR, gaming, virtual meetings, and content creation.

Early demos show avatars that are eerily lifelike, raising the bar for generative AI in virtual humans.

Potential Applications and Future Impact

VASA-3D opens doors to:

- Immersive metaverse avatars and telepresence.

- Personalized AI assistants with natural faces.

- Enhanced video dubbing and character animation in film/gaming.

- Accessibility tools for speech-impaired users.

As Gaussian splatting and audio-driven AI continue trending in 2025 research, expect rapid adoption and extensions (e.g., full-body or hair integration).

Limitations Noted in the Paper

The current version focuses on head-only avatars (no torso or detailed hair) and was evaluated on controlled virtual identities. Real-world robustness across diverse ethnicities, lighting, and accents remains an area for future improvement.

Read the full paper: arXiv 2512.14677 and VASA-3D (Microsoft)

Project page & demos: Available via Microsoft Research (coming soon post-NeurIPS).

Stay tuned to AI News for more coverage on NeurIPS 2025 breakthroughs, 3D AI avatars, and the latest in generative AI research. What do you think—will tools like VASA-3D redefine virtual communication in 2026? Drop your thoughts below!