December 18, 2025 – A new research paper introduces a hierarchical hybrid AI framework that combines deep reinforcement learning (RL) with traditional scripted agents to produce more adaptable behavior in combat simulations and wargaming. The method targets long-standing problems in training AI agents for military simulations, and the authors report better performance in dynamic, unpredictable scenarios.

Published on arXiv (2512.00249) and presented at the 2025 Interservice/Industry Training, Simulation, and Education Conference (I/ITSEC), the work by Scotty Black and colleagues reports that blending the two approaches outperforms standalone scripted or RL agents.

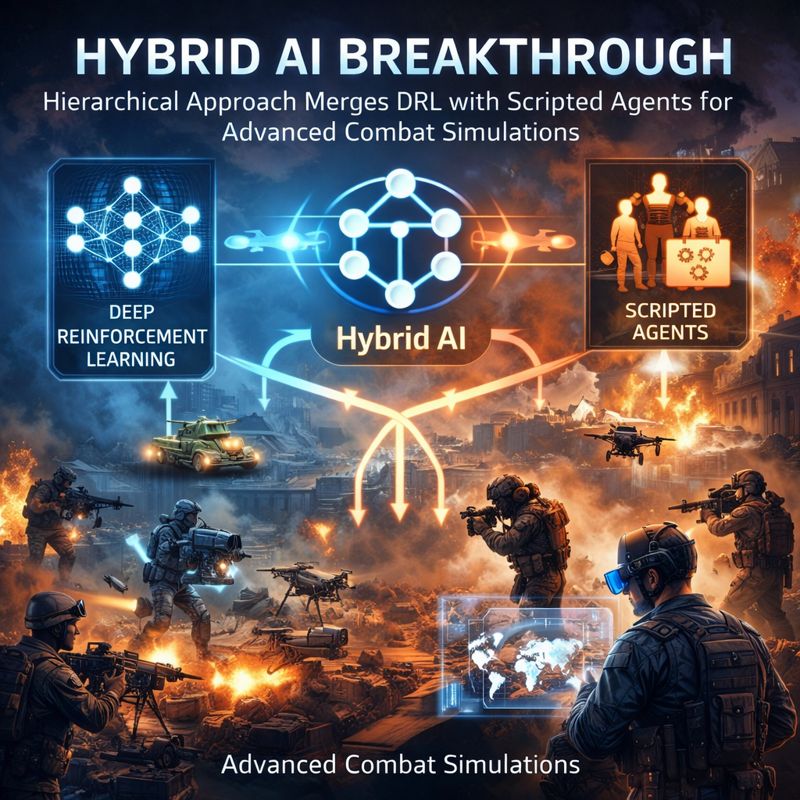

(Illustrative visuals of military wargaming simulations powered by AI agents, highlighting strategic decision-making in complex environments.)

The Problem with Traditional AI in Combat Simulations

In wargaming and military simulations, agents have historically relied on rule-based scripted behaviors, which are predictable and reliable in static settings but rigid and ineffective when something unexpected happens.

Pure deep reinforcement learning agents shine in adaptability but struggle with:

- Black-box decisions (hard to explain or trust).

- Scalability in large-scale environments.

- Slow learning in high-stakes, sparse-reward scenarios.

The hierarchical hybrid approach bridges this gap by assigning each technique to the level where it works best: RL for high-level decisions and scripts for low-level execution.

How the Hierarchical Hybrid Framework Works

The architecture features a two-tier structure tested in the Atlatl Simulation Environment (a lightweight yet effective combat sim from the Naval Postgraduate School):

- High-Level RL Manager Agent: Handles strategic decisions—prioritizing threats, allocating resources, and adapting to evolving battlefield conditions using deep RL for learning from experience.

- Low-Level Scripted Subordinate Agents: Execute tactical, routine actions (e.g., movement, basic engagements) with proven reliability and speed.

This setup uses RL where it is strongest, in high-level planning, and relies on scripts for efficient, consistent execution, so the resulting agents are both adaptive and dependable.
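To make the two-tier split concrete, here is a minimal Python sketch of a manager issuing orders that scripted subordinates carry out. It is an illustration under stated assumptions, not the paper's implementation: the names `Order`, `Unit`, `scripted_move`, `Manager`, and `hybrid_step` are hypothetical, the one-dimensional map is a toy, and a small tabular value estimate stands in for the deep RL policy. It does not use the Atlatl API.

```python
import random
from dataclasses import dataclass
from enum import Enum


class Order(Enum):
    """Hypothetical high-level orders the manager can issue."""
    ATTACK = "attack"
    HOLD = "hold"
    RETREAT = "retreat"


@dataclass
class Unit:
    name: str
    position: int  # location on a toy one-dimensional map
    strength: int


def scripted_move(unit, order, enemy_position):
    """Low-level scripted agent: a fixed rule turns an order into a new position."""
    if order is Order.HOLD:
        return unit.position
    # Direction toward the enemy: -1, 0, or +1.
    toward = (enemy_position > unit.position) - (enemy_position < unit.position)
    if order is Order.ATTACK:
        return unit.position + toward
    return unit.position - toward  # RETREAT moves away from the enemy


class Manager:
    """High-level learner: a tabular stand-in for a deep RL policy (assumption)."""

    def __init__(self, epsilon=0.1, seed=0):
        self.values = {}  # (observation, order) -> estimated reward
        self.epsilon = epsilon
        self.rng = random.Random(seed)

    def observe(self, unit, enemy_position, enemy_strength):
        # Coarse observation: are we at least as strong, and is the enemy close?
        stronger = unit.strength >= enemy_strength
        close = abs(enemy_position - unit.position) <= 2
        return (stronger, close)

    def choose(self, obs):
        # Epsilon-greedy: explore occasionally, otherwise pick the best-valued order.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(Order))
        return max(Order, key=lambda o: self.values.get((obs, o), 0.0))

    def update(self, obs, order, reward, lr=0.5):
        # Move the stored estimate a fraction of the way toward the observed reward.
        old = self.values.get((obs, order), 0.0)
        self.values[(obs, order)] = old + lr * (reward - old)


def hybrid_step(manager, units, enemy_position, enemy_strength):
    """One decision cycle: the manager picks orders, the scripts execute them."""
    orders = {}
    for unit in units:
        obs = manager.observe(unit, enemy_position, enemy_strength)
        order = manager.choose(obs)
        unit.position = scripted_move(unit, order, enemy_position)
        orders[unit.name] = order
    return orders
```

The learner in this sketch only ever chooses among a handful of orders, while the deterministic scripts decide how each order is carried out. That keeps the learned part small and the executed behavior easy to inspect, which is the division of labor the article describes.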

(Conceptual diagrams of hierarchical AI structures in air and ground combat simulations, showing RL managers overseeing scripted agents.)

Key Results and Performance Gains

Experiments reported in the paper show the hybrid agents significantly outperforming both purely scripted agents and standalone RL agents:

- Higher overall scores in dynamic combat scenarios.

- Better adaptability to unforeseen threats.

- Improved scalability for larger simulations.

The approach provides a robust, explainable path forward for AI in defense simulations.

Broader Implications for Military AI and Wargaming

As reinforcement learning in military applications gains traction, this hybrid AI model could accelerate training for autonomous systems, enhance decision-support tools, and inform real-world strategies, while the scripted layer keeps low-level behavior predictable and easier for humans to oversee.

This work aligns with growing trends in AI for defense and simulation-based training.

(Examples of RL agents in simulated combat environments, including multi-agent confrontations and maneuver decisions.)

Read the full paper: arXiv 2512.00249


Keywords: hierarchical hybrid AI, deep reinforcement learning combat, scripted agents simulation, military wargaming AI, I/ITSEC 2025 AI, defense simulation hybrid models